Self-Driving Car Crash Statistics


By Pierce | Skrabanek

Published on: August 29, 2025

Updated on: September 8, 2025


Self-driving cars were introduced as a safer alternative to human drivers. They rely on sensors, cameras, and machine learning, which are not prone to distraction, fatigue, or poor decisions. Yet crashes continue to occur, and the numbers are climbing as more of these vehicles hit the road.

People have been struck while crossing intersections, rear-ended in traffic, or sideswiped during routine turns. In many cases, passengers relied on the vehicle’s automated system to take full control. Others misunderstood what the car was capable of doing. The result: injuries, confusion, and questions about responsibility.

Self-driving car accident statistics provide a starting point to understand where the technology stands. The data shared here focuses on the last three years, giving a current look at how these vehicles perform and what happens when they don’t.

Interested in what the numbers reveal? Here’s what the latest self-driving car crash statistics show about accidents, safety, and risk.

Did you suffer injuries in a self-driving car accident? Pierce Skrabanek can help you understand your legal options. Call (832) 690-7000 or send us a message online for a free case review.

What Counts as a “Self-Driving Car” Today?

When most people think of self-driving cars, they imagine vehicles without steering wheels or pedals. In reality, the vast majority of cars on the road today fall into partial or conditional automation. They assist the driver but do not replace them.

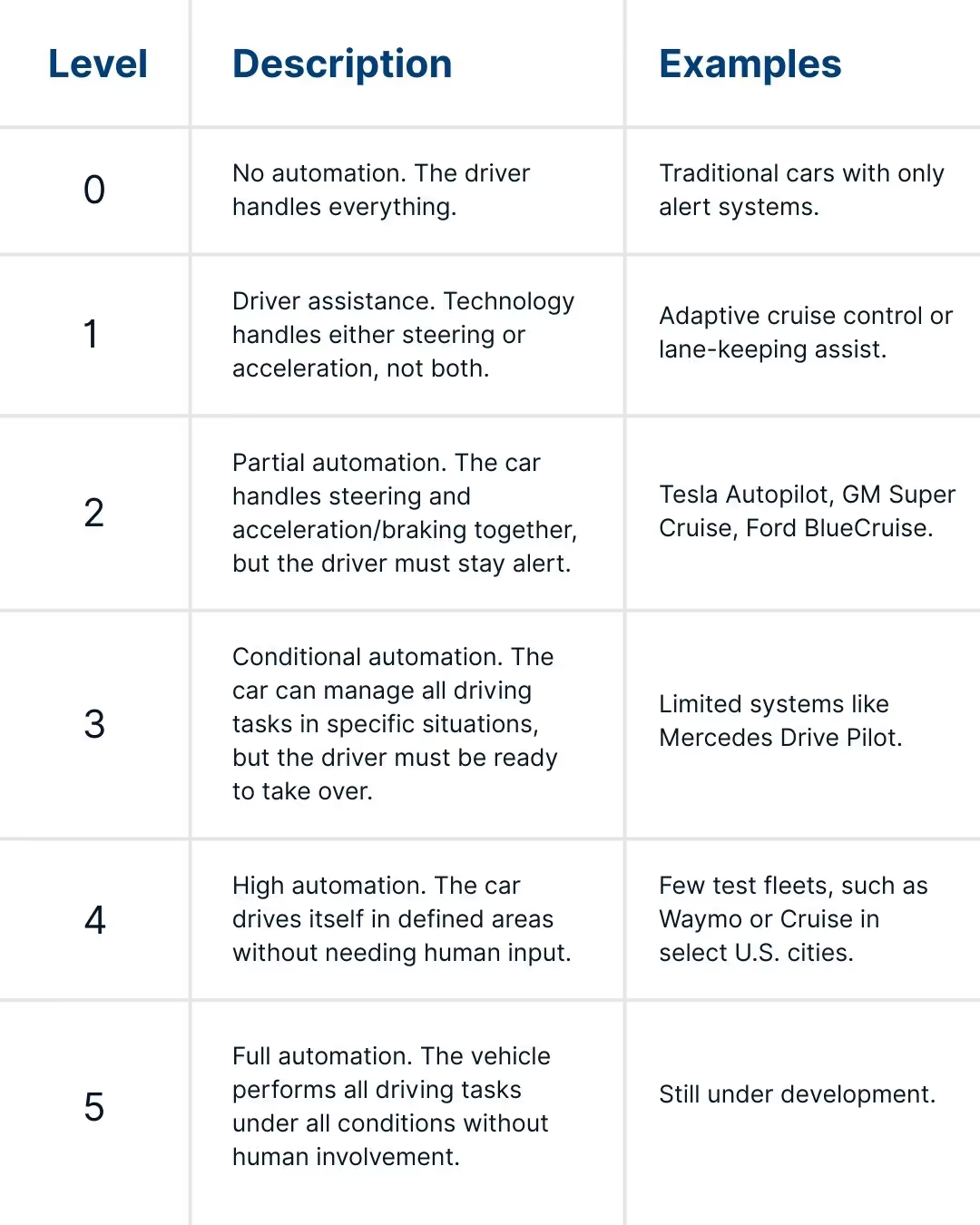

SAE International (formerly the Society of Automotive Engineers) defines six levels of driving automation, from Level 0 (no automation) to Level 5 (full autonomy).

Most cars on the road today use Level 2 systems, and a smaller number are testing Level 3. Tesla’s Autopilot and Ford’s BlueCruise are both examples of Level 2 features, which can help with steering and speed but still need the driver’s full attention.

The higher levels, 4 and 5, are not in regular use yet. They only show up in testing programs or limited trials in certain cities.

Understanding the level of automation is important when looking at self-driving car accident statistics because the type of system plays a big role in how accidents happen.

How Many Self-Driving Car Accidents Per Year?

Since June 2021, the National Highway Traffic Safety Administration (NHTSA) has required companies to report crashes involving cars with automation. Reports are split into two categories:

- ADS (Automated Driving Systems): Higher-level automation (Levels 3–5), where the vehicle can perform most or all driving tasks under certain conditions. Examples include Waymo and Cruise robotaxis.

- Level 2 ADAS (Advanced Driver Assistance Systems): Lower-level automation (Level 2), where the car can steer, brake, and accelerate but still requires the driver’s full attention. Examples include Tesla Autopilot, GM Super Cruise, and Ford BlueCruise.

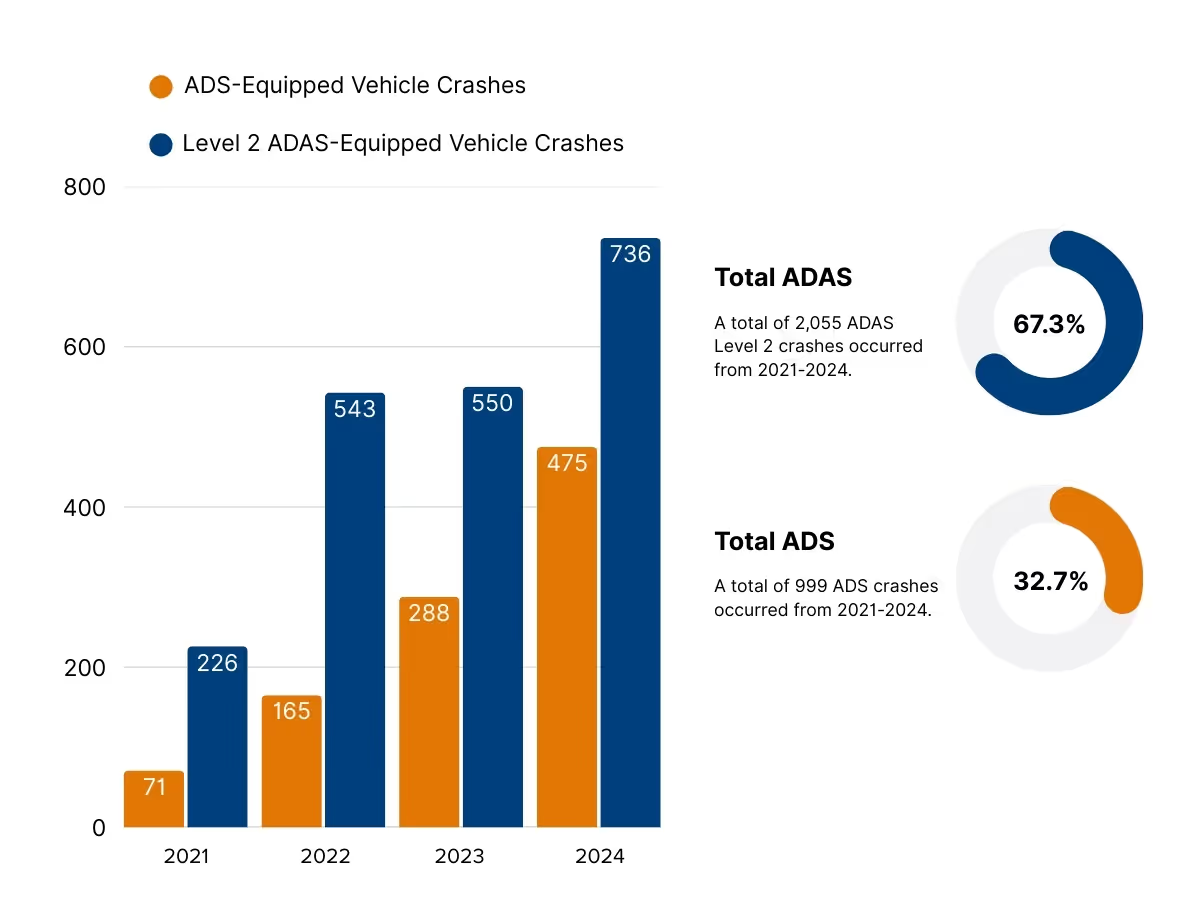

Here’s how many self-driving cars have crashed based on data from 2021–2024:

So, most reported incidents involve Level 2 driver-assist systems like Tesla Autopilot and Ford BlueCruise, rather than fully autonomous test vehicles.

From 2021 to 2024, Waymo reported the most ADS crashes (630 total). For Level 2 vehicles, Tesla reported the most (1,745 total).

As of early 2025, the California DMV has logged 781 autonomous vehicle collision reports, more than any other state. Texas and Arizona follow with high numbers as well, since both host large testing programs.

These numbers show that as self-driving cars become more common, several issues still need to be worked out:

- Drivers sometimes trust the car to do more than it can handle.

- The software may fail to recognize certain hazards.

- When the system suddenly hands control back to the driver, people are caught off guard.

If you or a loved one was hurt in a self-driving car accident, Pierce Skrabanek can help you understand who may be responsible and guide you through the claims process. Call (832) 690-7000 or contact us online to speak with a car accident attorney.

What Causes These Crashes?

Crash investigations show that self-driving cars can fail in ways that put people at risk. Vehicles may follow traffic incorrectly, misread signals, or overlook pedestrians and cyclists.

The most common scenarios reported in self-driving car accidents include:

- Rear-end collisions while using adaptive cruise or lane-keeping features,

- Sudden braking triggered by misinterpreted sensor data,

- Lane departures caused by unclear or faded road markings,

- Collisions at intersections where the system failed to yield or stop,

- Accidents involving pedestrians or cyclists outside sensor range, and

- Multi-car pileups when abrupt maneuvers trigger chain-reaction crashes.

The self-driving car accident rate remains difficult to measure because these vehicles are still limited in number. Federal safety offices continue to collect reports, and each new case helps build a clearer picture of how the technology performs in daily traffic.

Do Self-Driving Cars Make Roads Safer?

Manufacturers promote self-driving technology as a way to reduce the errors that cause most crashes. Unlike people, a machine does not text behind the wheel, get tired, or drive drunk. That promise drives much of the investment in autonomous vehicles.

At the same time, the current transition period brings risks of its own. These vehicles must share the road with human drivers, pedestrians, cyclists, and unpredictable situations that do not always match their programming.

Key challenges include:

- Sensor and software issues: Faulty readings or misjudgments can cause vehicles to miss hazards or react incorrectly.

- Driver overreliance: People sometimes believe the system can do more than it actually can, leading to distraction or delayed reactions.

- Cybersecurity risks: Like other connected devices, self-driving cars face the possibility of hacking or system compromise.

- Road and weather conditions: Heavy rain, faded lane lines, or construction zones make it harder for systems to navigate safely.

- Mixed traffic dynamics: Automated cars behave cautiously, while human drivers often act aggressively, creating friction that can lead to collisions.

Independent research paints a mixed picture. A 2023 Insurance Institute for Highway Safety study found that some Level 2 systems reduced rear-end collisions, but they did not eliminate risk overall. A 2024 National Safety Council review noted no clear reduction in crash severity where self-driving cars are more common.

So far, the data shows progress in narrow areas but no broad proof that the technology has made roads safer overall.

Reported Crashes by Major Self-Driving Car Companies

Hundreds of self-driving car crashes have been reported through federal databases and company filings since 2021. These records show patterns that connect directly to specific developers and test programs.

- Waymo (Alphabet/Google): Waymo vehicles in Phoenix, San Francisco, and Los Angeles have been involved in collisions ranging from minor scrapes to more serious crashes at intersections. Reports also describe cases where cars stalled in traffic or stopped abruptly, creating hazards for nearby drivers.

- Cruise (General Motors): Cruise cars in San Francisco faced repeated scrutiny after incidents where vehicles blocked lanes, failed to yield to emergency responders, or made sudden unpredictable stops. Regulators suspended operations after pedestrian-related crashes raised urgent safety concerns.

- Tesla: Tesla’s Autopilot and Full Self-Driving (FSD) Beta remain the most widely used driver-assist systems. Federal crash data links hundreds of accidents to Teslas operating in Level 2 mode, including rear-end collisions and lane departures where drivers placed too much trust in the software.

- Other Companies: Smaller fleets operated by Aurora, Zoox, and Motional have also reported crashes, though at lower volumes since their cars remain limited to pilot programs.

While the total number of self-driving car accidents remains far below those caused by human drivers, these company-specific reports highlight how often problems arise when technology meets everyday traffic.

Injuries and Fatalities Involving Self-Driving Systems

Most crashes tied to automated driving systems don’t cause serious injury. But when they do, the results can be tragic.

A Tesla in Autopilot mode crashed into a fire truck in Walnut Creek, California, in February 2023. The driver was killed, and several firefighters were injured. Federal investigators later confirmed that driver-assist technology was active at the time of the crash.

The following year, Ford’s BlueCruise system was linked to two separate fatal crashes. The first occurred in February in San Antonio, when a Mustang Mach-E using BlueCruise struck and killed the driver of a stopped CR-V. One month later near Philadelphia, another BlueCruise vehicle hit a stopped car and two pedestrians on Interstate 95, killing all three. Both collisions happened at night while the driver-assist system was active.

Cruise’s robotaxi program also drew national attention in October 2023 when one of its vehicles dragged a San Francisco pedestrian who had already been hit by another car. The robotaxi’s rear wheel stopped on the victim’s legs, leaving the person with severe injuries.

Still, statistics on injuries and deaths involving self-driving cars remain limited. Police reports don’t always note whether automated systems were active, and reporting standards vary. Federal safety officials now ask self-driving car makers to include clearer information about automation when crash reports are submitted.

Who Is Responsible After a Self-Driving Car Accident?

When a self-driving car is involved in a crash, figuring out who is responsible is rarely simple. In a traditional accident, fault usually falls on one of the drivers. With automated systems, liability may shift between the person behind the wheel, the automaker, the software provider, or the company operating the fleet.

It may involve:

- A driver who failed to take control when required,

- A software error that misinterpreted a scenario, or

- A hardware defect in sensors or braking systems.

Insurance companies and courts are still navigating these issues. As of 2025, no uniform national standard defines who is at fault when an automated system fails. That means each case can play out differently depending on the state, the evidence, and the type of vehicle involved.

For victims, this creates extra challenges. Claims may involve multiple parties, from car manufacturers and tech developers to fleet operators running robotaxi services. Sorting through those layers of responsibility often requires legal support.

The Future of Self-Driving Car Rules

People still argue about whether self-driving cars are safer than human drivers. Current research shows they crash more frequently: about 9.1 accidents per million miles, compared to 4.1 accidents per million miles for regular cars. The difference is that injuries in self-driving car crashes are usually less severe.
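To make the per-mile rates above concrete, here is a minimal Python sketch. It uses only the two rates quoted in this article. The 10-million-mile figure and the helper name `expected_crashes` are illustrative assumptions, not data from any report.

```python
# Crash rates quoted in the article (crashes per million miles driven).
SELF_DRIVING_RATE = 9.1
HUMAN_DRIVEN_RATE = 4.1


def expected_crashes(rate_per_million_miles: float, miles: float) -> float:
    """Expected crash count for a given mileage at a per-million-mile rate."""
    return rate_per_million_miles * miles / 1_000_000


# How many times more often self-driving cars crash per mile, per the quoted rates.
rate_ratio = SELF_DRIVING_RATE / HUMAN_DRIVEN_RATE

# Hypothetical mileage, chosen only to make the rates easier to picture.
example_miles = 10_000_000

print(f"Rate ratio: {rate_ratio:.2f}x")
print(f"Self-driving: {expected_crashes(SELF_DRIVING_RATE, example_miles):.0f} crashes")
print(f"Human-driven: {expected_crashes(HUMAN_DRIVEN_RATE, example_miles):.0f} crashes")
```

At these rates, self-driving cars crash a little more than twice as often per mile. The comparison counts crashes only and says nothing about how severe they are.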

Supporters say these cars have faster reaction times because they use sensors and computers instead of human judgment. But the data is still limited, and it’s too early to say if they truly make roads safer.

Lawmakers are starting to respond by setting new rules, including:

- Requiring companies to file detailed crash reports,

- Creating clearer labels so drivers know what the systems can and can’t do, and

- Setting safety standards that cars must meet before wide release.

The self-driving car crash statistics collected between 2022 and 2025 will guide the next round of decisions. Leaders want to make sure the technology can grow without putting drivers, passengers, or pedestrians at greater risk.

For now, self-driving cars bring both promise and uncertainty. As the technology evolves, questions of safety, responsibility, and accountability will remain at the center of the conversation. That’s also where injured drivers and passengers will need strong advocates to protect their rights.

Injured in a Self-Driving Car Accident? We Are Ready to Help

Accidents involving self-driving cars raise complicated questions about who is responsible. Was the driver expected to step in? Did the vehicle’s software fail? Or did the manufacturer release unsafe technology onto public roads? For victims, the answers matter because they determine who pays for medical bills, lost wages, and long-term recovery.

At Pierce Skrabanek, we have been standing up for the injured for more than 30 years. Our firm has recovered over $500 million in verdicts and settlements on behalf of clients, and we bring that same dedication to cases involving self-driving cars. When advanced technology and public safety collide, we know how to build the evidence, work with experts, and hold powerful companies accountable.

If you were hurt in a crash involving a self-driving car, call (832) 690-7000 or reach out online to schedule a free case review.